Today, Android announced Activity Recognition's new Transition API. HyperTrack was amongst the few pre-release evaluation partners for the Transition API (hat tip to our friends at Life360 for making the connection). We have been testing the API for over 3 months now and have seen significant improvements. Starting today, the HyperTrack SDK is available in production with support for the new Transition API.

Why activity matters

HyperTrack launched its public Beta in the summer of 2016 because developers worldwide wanted a better way to build apps with real-time location tracking of users. Although we first built this to track business users making trips with commercial value, we learned that location tracking was an integral part of a larger set of product experiences, market spaces and use cases than we had initially imagined.

Thousands of developers started building use cases with HyperTrack for on-demand, logistics, transportation, sales force and service fleets. They started building use cases in consumer apps as well, for marketplaces, messaging, social media, financial services and health.

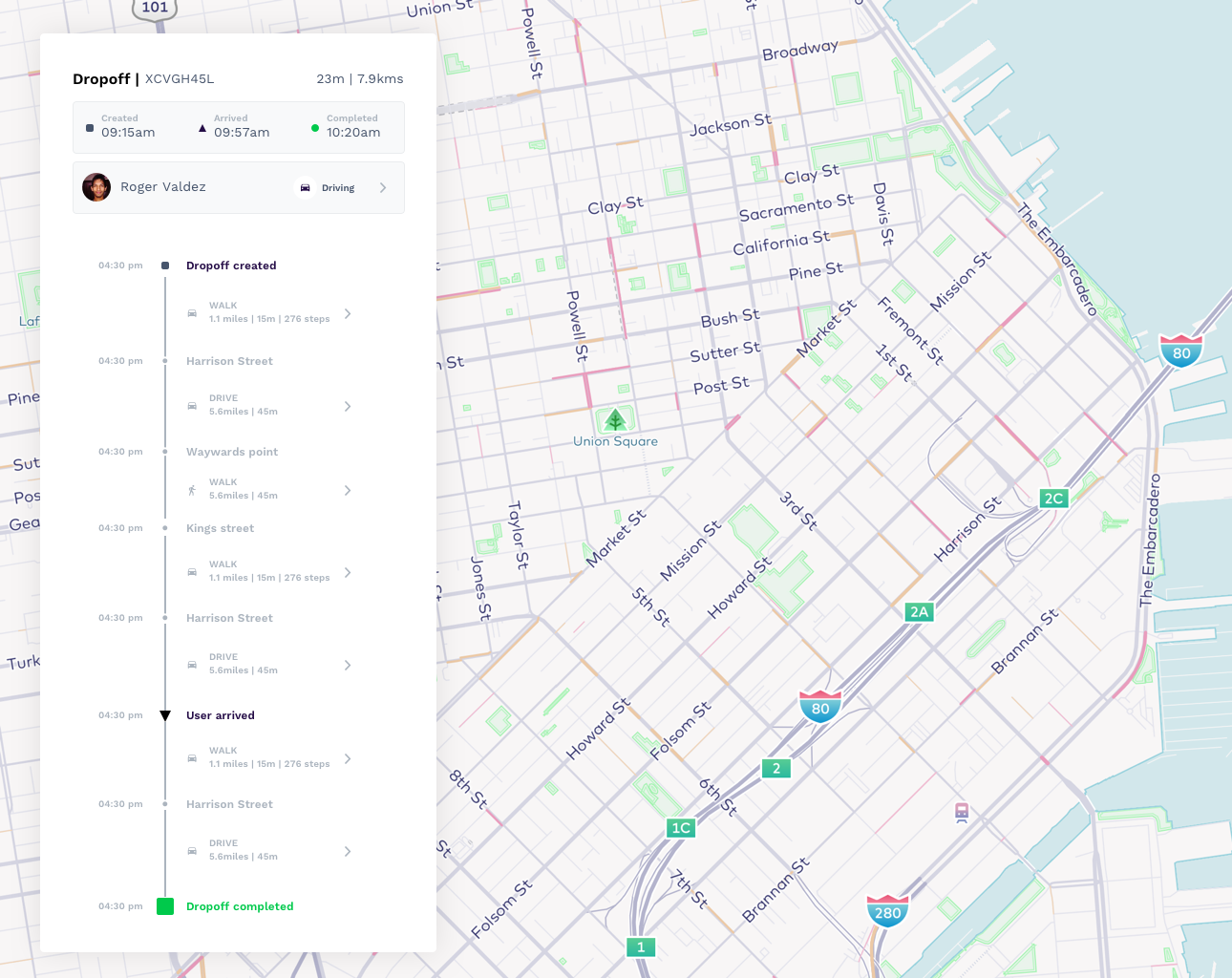

Based on their usage and feedback, it became clear that the places where users stop are as important as the routes taken to get there. Durations and activity at those places are as important as delays and interruptions on the way. Keeping tabs on activity and device health is critical to answering many questions about tracked users that location alone cannot answer.

Upgrading the stack from location to movement

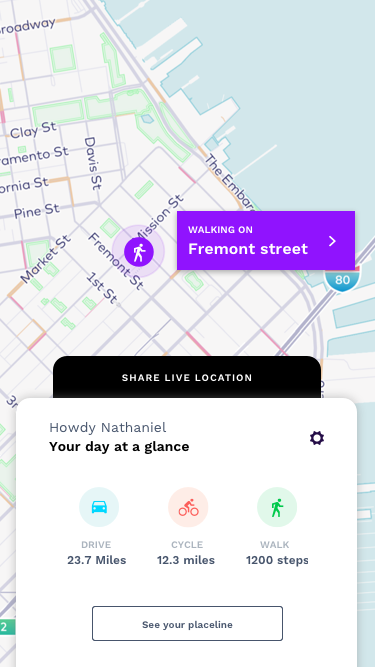

We started adding activity and device health as an integral part of the stack at the beginning of 2017. This first became available to developers in our March 2017 release. Over the course of the year, we upgraded our stack from location tracking to movement tracking, where movement = location + activity + device health.

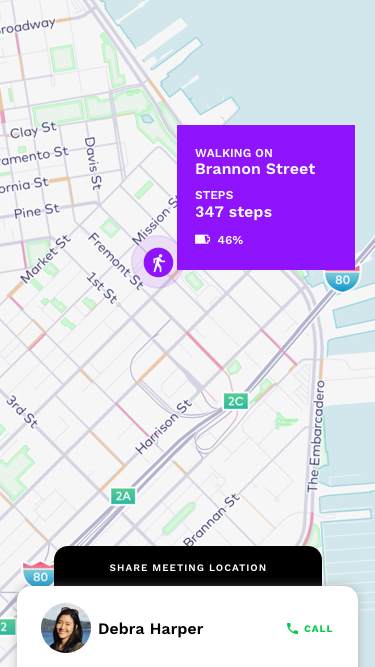

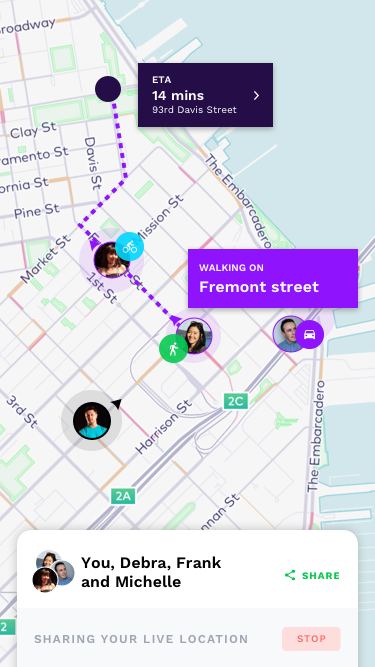

Movement is much more than location. While location tells us where the user is, activity tells us what the user is doing. We have seen that activity-awareness significantly improves battery efficiency, accuracy and real-timeness of tracking. Further, activity-awareness improves the product experience for every use case that you can build with tracking—order tracking experiences, live location sharing experiences, operations monitoring, geofencing, assignments and ETAs involving intermediate stops.

A technology that had been introduced in smartphones for personal health utilities was now being used to power a much wider spectrum of tracking use cases across applications. This is probably why operating systems have made significant improvements to these APIs.

Developers worldwide are building various applications and use cases with HyperTrack’s movement stack.

Role of the smartphone OS in detecting activity

The Android OS does a great job of detecting activity using a combination of the various sensors in the device: accelerometer, gyroscope, compass/magnetometer and pedometer, besides GPS for speed. Compared to iOS, this is much harder for Android because of fragmentation in the device ecosystem. Deployed devices across the world range from $50 to $1,000 phones with a wide spectrum of capabilities.

Activity detection is becoming a mainstream part of app experiences beyond personal health utilities. Arguably, activity is on its way to being as pervasive as location. Let us take a moment to draw some parallels.

- The OS generates location using multiple sensors: GPS, WiFi, Bluetooth and the cell network. Activity is likewise inferred from multiple sensors.

- While the algorithms remain proprietary, there is reason to believe that machine learning models make the OS smarter about choosing from the various sources when inferring location. The same seems true for activity.

- Locations used to be available as updates at fixed time and distance intervals. Fencing was recently added to the API, which makes the OS manage more state so that it can update the app when a user enters or exits a place. Similarly, activity was available as updates at fixed time intervals specified by developers. On Android, this changes today! Developers can now receive updates when the user transitions from one activity to another.

The new Transition API

Since Android 4.3 (Jelly Bean), ActivityRecognitionClient has been the class for activity recognition in the Android SDK. Developers call requestActivityUpdates with a time interval for sampling data from sensors. The OS periodically wakes up the device sensors to collect short bursts of data, infers the activity, and returns the user’s activity, such as walk, run, drive, cycle or stationary. Because activity is only sampled at these intervals, transitions between activities are detected with inaccuracies and delays.

At HyperTrack, we invested in building activity transition detection on top of this raw data, which the OS delivers to our SDK at fixed intervals. Shorter intervals drain the battery, while longer intervals miss the moment of activity transition, often the most important moment for real-time app workflows to kick in. This led to latency in activity detection and reduced confidence in the accuracy of related location events.
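To make the trade-off concrete, here is a minimal sketch of inferring transitions from fixed-interval samples. It is plain Java with hypothetical stand-in types (`Sample`, `Transition`) and an assumed confidence threshold; it is not the Android API, and it is not HyperTrack's actual algorithm.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative stand-ins only: these are not Android or HyperTrack classes.
class PolledTransitionDetector {

    /** One fixed-interval reading: the most probable activity and its confidence (0-100). */
    static final class Sample {
        final long timeSec;
        final String activity;
        final int confidence;

        Sample(long timeSec, String activity, int confidence) {
            this.timeSec = timeSec;
            this.activity = activity;
            this.confidence = confidence;
        }
    }

    /** A change of activity, stamped with the time of the sample that revealed it. */
    static final class Transition {
        final long detectedAtSec;
        final String from;
        final String to;

        Transition(long detectedAtSec, String from, String to) {
            this.detectedAtSec = detectedAtSec;
            this.from = from;
            this.to = to;
        }
    }

    /** Reports a transition whenever a confident sample differs from the last confident one. */
    static List<Transition> detect(List<Sample> samples, int minConfidence) {
        List<Transition> transitions = new ArrayList<>();
        String current = null;
        for (Sample s : samples) {
            if (s.confidence < minConfidence) {
                continue; // skip low-confidence readings (the threshold is an assumption)
            }
            if (current != null && !current.equals(s.activity)) {
                transitions.add(new Transition(s.timeSec, current, s.activity));
            }
            current = s.activity;
        }
        return transitions;
    }
}
```

With one sample every 300 seconds, a drive that begins just after a sample can only be reported at the next confident IN_VEHICLE sample, so the detection time lags the real transition by up to a full interval, or more if a reading is rejected as low-confidence.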

With the new release, Android has added the requestActivityTransitionUpdates API, which lets you register for activity transition updates. When a requested transition event occurs, you receive a callback intent. The ActivityTransitionResult can be extracted from the intent to get the list of ActivityTransitionEvent objects, which are ordered chronologically. For example, if you requested vehicle enter and exit events, you will receive the vehicle enter event when the user starts driving and the vehicle exit event when the user transitions to another activity.
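Once the chronologically ordered events are in hand, one natural next step is to pair enter and exit events into activity segments. The sketch below shows that pairing in plain Java. The `Event`, `Kind` and `Segment` types are hypothetical stand-ins for illustration, not the Android classes, and the choice to ignore an exit with no matching enter is an assumption.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustrative stand-ins only: these are not the Android ActivityTransitionEvent classes.
class TransitionPairing {

    enum Kind { ENTER, EXIT }

    static final class Event {
        final String activity;
        final Kind kind;
        final long timeSec;

        Event(String activity, Kind kind, long timeSec) {
            this.activity = activity;
            this.kind = kind;
            this.timeSec = timeSec;
        }
    }

    static final class Segment {
        final String activity;
        final long startSec;
        final long endSec;

        Segment(String activity, long startSec, long endSec) {
            this.activity = activity;
            this.startSec = startSec;
            this.endSec = endSec;
        }
    }

    /** Pairs each EXIT with the latest open ENTER of the same activity. Input must be chronological. */
    static List<Segment> toSegments(List<Event> events) {
        Map<String, Long> openSince = new HashMap<>();
        List<Segment> segments = new ArrayList<>();
        for (Event e : events) {
            if (e.kind == Kind.ENTER) {
                openSince.put(e.activity, e.timeSec);
            } else {
                Long start = openSince.remove(e.activity);
                if (start != null) { // assumption: an EXIT without a matching ENTER is ignored
                    segments.add(new Segment(e.activity, start, e.timeSec));
                }
            }
        }
        return segments; // activities that are still open produce no segment yet
    }
}
```

For instance, a vehicle enter at 100 seconds followed by a vehicle exit at 700 seconds yields a single IN_VEHICLE segment from 100 to 700, while a walking enter with no exit yet stays open.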

Observations from our evaluation

We have been testing these APIs for the last 3 months in our test builds and we have experienced significant improvements along the three primary dimensions of our evaluation.

- Real-timeness: Drive detections that took 4-5 minutes in earlier versions, even with higher sampling intervals, are consistently under a minute now, and with better accuracy.

- Accuracy: The biggest win for us is that we no longer need to run complex algorithms to remove noise from the point-in-time activity data generated by the Activity Recognition API. We can rely on the Activity Transition API to generate activity segments. The additional noise filtering is now about accepting, rejecting, re-calibrating or merging activity segments rather than generating them from scratch.

- Battery efficiency: Battery usage seems to be lower with the new Activity Transition API, probably because the OS manages the periodic sensor wake-ups for detecting transitions. However, we do not have statistically significant observations to conclude one way or the other.
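As an illustration of the "merging" step mentioned under accuracy, the sketch below joins consecutive segments of the same activity when the gap between them is small. It is plain Java with a hypothetical `Segment` type and an assumed `maxGapSec` parameter; the real filtering rules are not described in this post.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative stand-ins only: not HyperTrack's pipeline.
class SegmentMerger {

    static final class Segment {
        final String activity;
        final long startSec;
        final long endSec;

        Segment(String activity, long startSec, long endSec) {
            this.activity = activity;
            this.startSec = startSec;
            this.endSec = endSec;
        }
    }

    /** Merges consecutive same-activity segments separated by at most maxGapSec. Input must be chronological. */
    static List<Segment> mergeAdjacent(List<Segment> segments, long maxGapSec) {
        List<Segment> merged = new ArrayList<>();
        for (Segment s : segments) {
            Segment last = merged.isEmpty() ? null : merged.get(merged.size() - 1);
            boolean joinable = last != null
                    && last.activity.equals(s.activity)
                    && s.startSec - last.endSec <= maxGapSec;
            if (joinable) {
                // Replace the previous segment with one that spans both.
                merged.set(merged.size() - 1,
                        new Segment(last.activity, last.startSec, Math.max(last.endSec, s.endSec)));
            } else {
                merged.add(s);
            }
        }
        return merged;
    }
}
```

With a 60-second gap allowance, two IN_VEHICLE segments covering 0 to 300 and 320 to 900 become one drive from 0 to 900, and a following WALKING segment is left untouched.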

HyperTrack improvements over Transition API

Scenarios that did not work

The Activity Transition API is a significant improvement over the previous activity recognition APIs. However, it is not perfect. In our testing, we noticed that it could get confused in certain situations. In slow-moving traffic, a continuous drive might get reported as a mix of IN_VEHICLE, ON_BICYCLE and STILL activities. At other times, the API triggers insignificant WALKING and RUNNING segments (e.g. a short walk to the water cooler or a dash to the restroom).
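One simple way to picture handling both cases is to absorb a short segment when it is sandwiched between two segments of the same activity. The sketch below is a hypothetical heuristic in plain Java, with an assumed `maxInterruptionSec` threshold and a stand-in `Segment` type; it is not a description of how HyperTrack actually filters this data.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical heuristic for illustration only.
class InterruptionFilter {

    static final class Segment {
        final String activity;
        final long startSec;
        final long endSec;

        Segment(String activity, long startSec, long endSec) {
            this.activity = activity;
            this.startSec = startSec;
            this.endSec = endSec;
        }

        long durationSec() {
            return endSec - startSec;
        }
    }

    /** Absorbs short segments that sit between two segments of the same activity. Input must be chronological. */
    static List<Segment> absorbInterruptions(List<Segment> segments, long maxInterruptionSec) {
        List<Segment> out = new ArrayList<>();
        int i = 0;
        while (i < segments.size()) {
            Segment cur = segments.get(i);
            // Keep extending cur while the next segment is short and the one after it resumes cur's activity.
            while (i + 2 < segments.size()
                    && segments.get(i + 1).durationSec() <= maxInterruptionSec
                    && segments.get(i + 2).activity.equals(cur.activity)) {
                cur = new Segment(cur.activity, cur.startSec, segments.get(i + 2).endSec);
                i += 2;
            }
            out.add(cur);
            i++;
        }
        return out;
    }
}
```

Under a 120-second threshold, a drive reported as IN_VEHICLE, a 60-second STILL, IN_VEHICLE, a 90-second ON_BICYCLE and IN_VEHICLE again collapses into one drive, and a 40-second walk to the water cooler between two STILL segments disappears. What counts as "short" is a judgment call that depends on the use case.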

Defining a transition is subjective

We understand that these are non-deterministic problems, hence the accuracy won’t ever be 100%. The notion of accuracy itself is subjective, depending on what you want to consider a walk, for instance. In general, operating systems do a good job of generating activity and transition data, but we often find the data too granular and therefore too noisy for direct consumption in use cases. iOS has similar limitations to Android in this regard. At HyperTrack, we have debated endlessly about what would and would not constitute a transition for practical purposes, often throwing use cases at each other. But that’s another story for another day.

Making activity and location work together

Activity data in the OS lacks location context and vice versa. HyperTrack removes noise by processing the two data sets in tandem. We have addressed many of these issues by building a location and activity data filtering pipeline made up of a series of algorithms. We believe that resolving them invariably requires a device-to-server stack and real-time map context.

Staying up to date

It is hard for app developers to constantly update their apps with the latest and greatest that the OS has to offer. This is especially true when you have a complex stack of features that use activity and location data upstream in a peculiar way.

In fact, we spoke to a number of high-scale food and grocery delivery apps around the world and learned that they still infer activity in the cloud using location time series data as the only input. They do not directly use activity data from the OS. There is an understandable fear of the unknown. There is also the uncertainty of changing the underlying stack that complex machine learning models run on. ETAs, events and inferences generated from these models might power other business workflows, with product experiences and workers’ livelihoods depending on them.

Even if developers were to dive deeper into the OS APIs on their own, it might take months of testing and development effort that is not core to the business. HyperTrack solves this by staying current with the latest and greatest that the OS has to offer, while powering the data through formats and abstractions that are consistent across OS versions, devices, programming languages and use cases.

Get started

Want to start consuming the new Activity Transition API with HyperTrack’s magic on top? Get started now and tell us what you think. Make sure that the SDK build version is 0.6.24, with a dependency on Google Play services 12.0.0 and above.